Deploying Kubernetes from binaries

This post walks through installing and configuring a Kubernetes cluster with kubeasz.

    root@k8s-master-11:~# apt -y install python2.7
    # Create the symlink

Installing ansible

    # ansible runs on host 23
    # Install ansible with pip so that a newer version is picked up
    # Install ansible via pip, using the Aliyun mirror as the package source
    # Check the version number after installation
    # Set up passwordless SSH login
    #!/bin/bash
    ...
        echo "${node}: key copied successfully"
      else
        echo "${node}: key copy failed"
      fi
    done
    # Install sshpass
    root@ansible-23:~# apt -y install sshpass
    # Run the script to distribute the public key
    root@ansible-23:~# bash scp.sh
    # Test the passwordless SSH connection
    root@ansible-23:~# ssh 172.20.17.11
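Only the tail of the key-distribution script survives above, so here is a minimal sketch of what such a script could look like. It is not the original scp.sh: the node list, the root user and the DRY_RUN switch are assumptions for illustration, and it expects a key pair to exist already (for example from ssh-keygen) and the password to be exported in SSHPASS.

```shell
#!/bin/bash
# Sketch only: NODES, the root user and DRY_RUN are assumptions, not taken from the original scp.sh.
NODES="${NODES:-172.20.17.11}"   # space-separated list of target hosts
DRY_RUN="${DRY_RUN:-1}"          # 1 = only print the command, 0 = really run it

# Copy the local public key to one node.
# "sshpass -e" reads the login password from the SSHPASS environment variable.
copy_key() {
    local node=$1
    local cmd="sshpass -e ssh-copy-id -o StrictHostKeyChecking=no root@${node}"
    if [ "$DRY_RUN" = "1" ]; then
        echo "$cmd"
    else
        $cmd
    fi
}

# In dry-run mode this loop only shows what would be executed.
for node in $NODES; do
    if copy_key "$node"; then
        echo "${node}: key copied successfully"
    else
        echo "${node}: key copy failed"
    fi
done
```

Run it once with the default DRY_RUN=1 to review the commands, then with DRY_RUN=0 and SSHPASS set to perform the copy.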

Downloading the Kubernetes deployment tool

GitHub project: https://github.com/easzlab/kubeasz

This walkthrough uses kubeasz release 3.1.1.

    # GitHub is not reachable from here, so download the installation packages in advance
    # Edit the Docker and Kubernetes versions in the ezdown script
    DOCKER_VER=20.10.10
    KUBEASZ_VER=3.1.1
    K8S_BIN_VER=v1.22.5
    EXT_BIN_VER=0.9.5
    SYS_PKG_VER=0.4.1
    HARBOR_VER=v2.1.3
    REGISTRY_MIRROR=CN
    # After editing, download all the image packages
    # Create the cluster name

    # Edit the hosts file
    # 'etcd' cluster should have odd member(s) (1,3,5,...)
    ...

    # master node(s), set unique 'k8s_nodename' for each node
    # CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',
    # and must start and end with an alphanumeric character
    [kube_master]
    172.20.17.11 k8s_nodename='k8s-master-11'
    172.20.17.12 k8s_nodename='k8s-master-12'
    172.20.17.13 k8s_nodename='k8s-master-13'

    # work node(s), set unique 'k8s_nodename' for each node
    # CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',
    # and must start and end with an alphanumeric character
    [kube_node]
    172.20.17.14 k8s_nodename='k8s-node-14'
    172.20.17.15 k8s_nodename='k8s-node-15'

    [harbor]
    #172.20.17.8 NEW_INSTALL=false

    # [optional] loadbalance for accessing k8s from outside
    [ex_lb]
    172.20.17.21 LB_ROLE=master EX_APISERVER_VIP=172.20.17.188 EX_APISERVER_PORT=6443
    ... LB_ROLE=backup EX_APISERVER_VIP=172.20.17.188 EX_APISERVER_PORT=6443

    # [optional] ntp server for the cluster
    [chrony]
    #172.20.17.1

    [all:vars]
    # --------- Main Variables ---------------
    # Secure port for apiservers
    SECURE_PORT="6443"

    # Cluster container-runtime supported: docker, containerd
    # if k8s version >= 1.24, docker is not supported
    CONTAINER_RUNTIME="docker"

    # Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
    CLUSTER_NETWORK="calico"

    # Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
    PROXY_MODE="ipvs"

    # K8S Service CIDR, not overlap with node(host) networking
    SERVICE_CIDR="10.100.0.0/16"

    # Cluster CIDR (Pod CIDR), not overlap with node(host) networking
    CLUSTER_CIDR="10.200.0.0/16"

    # NodePort Range
    NODE_PORT_RANGE="30000-40000"

    # Cluster DNS Domain
    CLUSTER_DNS_DOMAIN="dklwj.local"

    # Binaries Directory
    bin_dir="/usr/bin"

    # Deploy Directory (kubeasz workspace)
    base_dir="/etc/kubeasz"

    # Directory for a specific cluster
    cluster_dir="{{ base_dir }}/clusters/k8s-01"

    # CA and other components cert/key Directory
    ca_dir="/etc/kubernetes/ssl"

    # Default 'k8s_nodename' is empty
    k8s_nodename=''

    # Edit config.yml
    ############################
    # prepare
    ############################
    # Optionally install system packages offline (offline|online)
    INSTALL_SOURCE: "online"

    # Optionally apply OS security hardening, see github.com/dev-sec/ansible-collection-hardening
    OS_HARDEN: false

    ############################
    # role:deploy
    ############################
    # default: ca will expire in 100 years
    # default: certs issued by the ca will expire in 50 years
    CA_EXPIRY: "876000h"
    CERT_EXPIRY: "438000h"

    # force to recreate CA and other certs, not suggested to set 'true'
    CHANGE_CA: false

    # kubeconfig parameters
    CLUSTER_NAME: "cluster1"
    CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

    # k8s version
    K8S_VER: "1.25.6"

    # set unique 'k8s_nodename' for each node, if not set(default:'') ip add will be used
    # CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',
    # and must start and end with an alphanumeric character (e.g. 'example.com'),
    # regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'
    K8S_NODENAME: "{%- if k8s_nodename != '' -%} \
                        {{ k8s_nodename|replace('_', '-')|lower }} \
                   {%- else -%} \
                        {{ inventory_hostname }} \
                   {%- endif -%}"

    ############################
    # role:etcd
    ############################
    # A separate WAL directory avoids disk I/O contention and improves performance
    ETCD_DATA_DIR: "/var/lib/etcd"
    ETCD_WAL_DIR: ""

    ############################
    # role:runtime [containerd,docker]
    ############################
    # ------------------------------------------- containerd
    # [.] Enable registry mirrors
    ENABLE_MIRROR_REGISTRY: true

    # [containerd] Base (sandbox) container image
    SANDBOX_IMAGE: "172.20.17.24/base-image/pause:3.9"

    # [containerd] Persistent container storage directory
    CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

    # ------------------------------------------- docker
    # [docker] Container storage directory
    DOCKER_STORAGE_DIR: "/var/lib/docker"

    # [docker] Enable the RESTful API
    ENABLE_REMOTE_API: false

    # [docker] Trusted HTTP registries
    INSECURE_REG: '["http://easzlab.io.local:5000","172.20.17.24"]'

    ############################
    # role:kube-master
    ############################
    # Certificate hosts for the master nodes; more IPs and domains can be added (e.g. a public IP and domain)
    MASTER_CERT_HOSTS:
      - "10.1.1.1"
      - "k8s.easzlab.io"

    # Mask length of the pod subnet on each node (determines how many pod IPs a node can allocate)
    # If flannel runs with --kube-subnet-mgr, it reads this setting to assign a pod subnet to each node
    # https://github.com/coreos/flannel/issues/847
    NODE_CIDR_LEN: 24

    ############################
    # role:kube-node
    ############################
    # Kubelet root directory
    KUBELET_ROOT_DIR: "/var/lib/kubelet"

    # Maximum number of pods per node
    MAX_PODS: 300

    # Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)
    # See templates/kubelet-config.yaml.j2 for the values
    KUBE_RESERVED_ENABLED: "no"

    # Upstream Kubernetes advises against enabling system-reserved casually, unless long-term monitoring
    # has shown you how much the system actually uses; the reservation also needs to grow as uptime
    # increases, see templates/kubelet-config.yaml.j2 for the values.
    # The defaults assume a 4c/8g VM with a minimal set of system services; increase them on powerful physical machines.
    # The apiserver and other components briefly use more resources during installation, so reserve at least 1g of memory.
    SYS_RESERVED_ENABLED: "no"

    ############################
    # role:network [flannel,calico,cilium,kube-ovn,kube-router]
    ############################
    # ------------------------------------------- flannel
    # [flannel] Backend: "host-gw", "vxlan", etc.
    FLANNEL_BACKEND: "vxlan"
    DIRECT_ROUTING: false

    # [flannel]
    flannel_ver: "v0.19.2"

    # ------------------------------------------- calico
    # [calico] IPIP tunnel mode, one of [Always, CrossSubnet, Never]. Across subnets use Always or CrossSubnet
    # (Always is the easy choice on public clouds; otherwise the cloud network configuration has to be changed,
    # see each provider's documentation).
    # CrossSubnet is a hybrid of tunnelling and BGP routing that can improve performance; within a single subnet, Never is enough.
    CALICO_IPV4POOL_IPIP: "Always"

    # [calico] Host IP used by calico-node; BGP peers connect through it. Can be set manually or auto-detected
    IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

    # [calico] Networking backend: brid, vxlan, none
    CALICO_NETWORKING_BACKEND: "brid"

    # Enabling this is recommended for clusters with more than 50 nodes
    CALICO_RR_ENABLED: false

    # CALICO_RR_NODES lists the route reflector nodes; if unset, the cluster master nodes are used
    # CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]
    CALICO_RR_NODES: []

    # [calico] Supported calico versions: ["3.19", "3.23"]
    calico_ver: "v3.24.5"

    # [calico] calico major version
    calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

    # ------------------------------------------- cilium
    # [cilium] Image version
    cilium_ver: "1.12.4"
    cilium_connectivity_check: true
    cilium_hubble_enabled: false
    cilium_hubble_ui_enabled: false

    # ------------------------------------------- kube-ovn
    # [kube-ovn] OVN DB and OVN Control Plane node, the first master node by default
    OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

    # [kube-ovn] Offline image tar package
    kube_ovn_ver: "v1.5.3"

    # ------------------------------------------- kube-router
    # [kube-router] Public clouds impose restrictions and usually need ipinip always on; in your own environment "subnet" can be used
    OVERLAY_TYPE: "full"

    # [kube-router] NetworkPolicy support switch
    FIREWALL_ENABLE: true

    # [kube-router] kube-router image version
    kube_router_ver: "v0.3.1"
    busybox_ver: "1.28.4"

    ############################
    # role:cluster-addon
    ############################
    # Install coredns automatically
    dns_install: "no"
    corednsVer: "1.9.3"
    ENABLE_LOCAL_DNS_CACHE: false
    # Local DNS cache address
    LOCAL_DNS_CACHE: "169.254.20.10"

    # Install metrics server automatically
    metricsserver_install: "no"
    metricsVer: "v0.5.2"

    # Install dashboard automatically
    dashboard_install: "no"

    # Install prometheus automatically
    prom_install: "no"
    prom_namespace: "monitor"
    prom_chart_ver: "39.11.0"

    # Install nfs-provisioner automatically
    nfs_provisioner_install: "no"
    nfs_provisioner_namespace: "kube-system"
    nfs_provisioner_ver: "v4.0.2"
    nfs_storage_class: "managed-nfs-storage"
    nfs_server: "192.168.1.10"
    nfs_path: "/data/nfs"

    # Install network-check automatically
    network_check_enabled: false
    network_check_schedule: "*/5 * * * *"

    ############################
    # role:harbor
    ############################
    # harbor version, full version number
    HARBOR_VER: "v2.6.3"
    HARBOR_DOMAIN: "harbor.easzlab.io.local"
    HARBOR_PATH: /var/data
    HARBOR_TLS_PORT: 8443
    HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"

    # if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'
    HARBOR_SELF_SIGNED_CERT: true

    # install extra component
    HARBOR_WITH_NOTARY: false
    HARBOR_WITH_TRIVY: false
    HARBOR_WITH_CHARTMUSEUM: true
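Two of the settings above are easy to get wrong, so a quick check before running the playbooks can save a failed run. The sketch below mirrors the `replace('_', '-')|lower` normalisation and the validation regex quoted in config.yml, and computes how many addresses a per-node pod subnet of a given NODE_CIDR_LEN holds. The function names are made up for this example and are not part of kubeasz.

```shell
#!/bin/bash
# Mirror of the filter chain in K8S_NODENAME: replace('_', '-') followed by lower.
normalize_nodename() {
    printf '%s\n' "$1" | tr '_' '-' | tr '[:upper:]' '[:lower:]'
}

# Return 0 if the name matches the validation regex quoted in config.yml (anchored to the whole string).
is_valid_nodename() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
}

# Number of IP addresses in a per-node pod subnet with the given prefix length.
node_subnet_size() {
    echo $(( 1 << (32 - $1) ))
}

for name in k8s-master-11 K8S_Node_14; do
    fixed=$(normalize_nodename "$name")
    if is_valid_nodename "$fixed"; then
        echo "${name} -> ${fixed} (valid)"
    else
        echo "${name} -> ${fixed} (invalid)"
    fi
done
echo "addresses per node with NODE_CIDR_LEN=24: $(node_subnet_size 24)"
```

The subnet size is worth comparing with MAX_PODS whenever the network plugin allocates pod IPs from the per-node subnet, as the comment above describes for flannel.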

Initializing the Kubernetes cluster

Environment initialization

    root@ansible-23:/etc/kubeasz# ./ezctl setup k8s-01 01
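The numeric argument of `ezctl setup` selects an installation stage. The mapping below is how I understand the kubeasz step numbering (01, 04, 05 and 06 match their use in this post; the others are from memory of the tool's help text), so treat it as an assumption and confirm it against the help output of your kubeasz version. The loop only prints the commands.

```shell
#!/bin/bash
# Step names as I understand them from kubeasz; verify against your version's help output.
ezctl_step_name() {
    case "$1" in
        01) echo "prepare" ;;
        02) echo "etcd" ;;
        03) echo "container-runtime" ;;
        04) echo "kube-master" ;;
        05) echo "kube-node" ;;
        06) echo "network" ;;
        07) echo "cluster-addon" ;;
        *)  echo "unknown"; return 1 ;;
    esac
}

# Print (do not run) the setup commands for cluster k8s-01 in order.
for step in 01 02 03 04 05 06 07; do
    echo "./ezctl setup k8s-01 ${step}   # $(ezctl_step_name "$step")"
done
```

Running the stages one at a time, as this post does, makes it easier to verify each component before moving on.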

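Deploying etcd

    # Install etcd

Verifying the etcd installation

After installation, check on the etcd nodes that the cluster is healthy.

    root@k8s-etcd-17:~# export NODE_IPS="172.20.17.17 172.20.17.18 172.20.17.19"
    root@k8s-etcd-17:~# echo ${NODE_IPS}
    root@k8s-etcd-17:~# for ... in ${NODE_IPS}; do ETCDCTL_API=3 ...
    # Note: the output returned above indicates that the etcd cluster is running normally; anything else indicates a problem!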
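Because the loop body is cut off above, here is a sketch of a per-member health check built on `etcdctl endpoint health`. NODE_IPS comes from the text; the certificate file names (ca.pem, etcd.pem, etcd-key.pem) are assumptions based on the ca_dir of /etc/kubernetes/ssl and may differ on your nodes. The script prints the commands rather than running them.

```shell
#!/bin/bash
# NODE_IPS is taken from the text; the certificate file names below are assumptions.
NODE_IPS="${NODE_IPS:-172.20.17.17 172.20.17.18 172.20.17.19}"
CERT_DIR="/etc/kubernetes/ssl"

# Turn one IP into an etcd client URL (2379 is etcd's default client port).
etcd_endpoint() {
    echo "https://$1:2379"
}

# Print one health-check command per member; drop the leading "echo" to execute them.
for ip in ${NODE_IPS}; do
    echo ETCDCTL_API=3 etcdctl \
        --endpoints="$(etcd_endpoint "$ip")" \
        --cacert="${CERT_DIR}/ca.pem" \
        --cert="${CERT_DIR}/etcd.pem" \
        --key="${CERT_DIR}/etcd-key.pem" \
        endpoint health
done
```

Checking each member separately shows which node is at fault if one of them is unhealthy.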

Deploying Docker

Deploy the Docker service on every node.

    # Deploy the Docker service

Verifying Docker on the nodes

As before, it is safer to check once the installation has finished.

    root@k8s-node-14:~# docker version

Deploying the masters

    root@ansible-23:/etc/kubeasz# ./ezctl setup k8s-01 04
    # Verify on master1
    # Verify on master2

Deploying the nodes

    root@ansible-23:/etc/kubeasz# ./ezctl setup k8s-01 05
    # Verify that the nodes have joined the cluster
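The verification command itself did not survive here. One common way to check is `kubectl get nodes`, and the helper below (a hypothetical function for this example) counts how many rows report a Ready status. It reads the table from stdin, so it can be tried without a cluster.

```shell
#!/bin/bash
# Count nodes whose STATUS column starts with "Ready" in `kubectl get nodes` output.
# "Ready,SchedulingDisabled" counts as ready; "NotReady" does not.
count_ready_nodes() {
    awk 'NR > 1 && $2 ~ /^Ready/ { n++ } END { print n + 0 }'
}

# Typical use on a master:
#   kubectl get nodes | count_ready_nodes
```

With three masters and two workers, as defined in the hosts file above, the expected count is five once every node has joined.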

Deploying the Calico network service

    # Before deploying, edit the two files generated earlier: hosts and config.yml
    # Cluster container-runtime supported: docker, containerd
    CONTAINER_RUNTIME="docker"
    # Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
    CLUSTER_NETWORK="calico"
    # ------------------------------------------- calico
    # [calico] Setting CALICO_IPV4POOL_IPIP="off" can improve network performance; see docs/setup/calico.md for the constraints
    CALICO_IPV4POOL_IPIP: "Always"
    # [calico] Host IP used by calico-node; BGP peers connect through it. Can be set manually or auto-detected
    IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"
    # [calico] Networking backend: brid, vxlan, none
    CALICO_NETWORKING_BACKEND: "brid"
    # [calico] Supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]
    calico_ver: "v3.19.2"
    # [calico] calico major version
    calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"
    # [calico] Offline image tar package
    calico_offline: "calico_{{ calico_ver }}.tar"

Downloading the images in advance

Download the images Calico needs ahead of time and retag them with the local Harbor address, so that the deployment can pull them straight from the local Harbor.

    # calico-cni image
    # pod2daemon-flexvol
    # calico-node
    # calico-kube-controllers
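The pull, tag and push commands are missing above, so the following sketch prints one plausible sequence. The Harbor address and project match the image paths used in the Calico template in this post; the upstream image names (calico/cni and so on) are my assumption about where the images were pulled from.

```shell
#!/bin/bash
# HARBOR and PROJECT follow the image paths in the Calico template of this post;
# the upstream image names are assumptions.
HARBOR="172.20.17.24"
PROJECT="baseimage"

# Map an upstream reference to its Harbor name by replacing "/" with "-".
harbor_ref() {
    echo "${HARBOR}/${PROJECT}/$(echo "$1" | tr '/' '-')"
}

# Print the pull/tag/push sequence for each image instead of running it.
for img in calico/cni calico/pod2daemon-flexvol calico/node calico/kube-controllers; do
    src="${img}:v3.19.2"
    dst="$(harbor_ref "$src")"
    echo "docker pull ${src}"
    echo "docker tag ${src} ${dst}"
    echo "docker push ${dst}"
done
```

Pipe the output to a shell on a machine that can reach both the upstream registry and Harbor once the commands look right.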

Editing the Calico template

Before deploying, point the Calico images in the template at the local Harbor. They were pulled one by one and retagged with the local Harbor address in the previous step.

    root@ansible-23:/etc/kubeasz# vim roles/calico/templates/calico-v3.19.yaml.j2
    - name: install-cni
      image: 172.20.17.24/baseimage/calico-cni:v3.19.2
    - name: flexvol-driver
      image: 172.20.17.24/baseimage/calico-pod2daemon-flexvol:v3.19.2
    - name: calico-node
      image: 172.20.17.24/baseimage/calico-node:v3.19.2
    - name: calico-kube-controllers
      image: 172.20.17.24/baseimage/calico-kube-controllers:v3.19.2
    ...

Deploying the Calico network

With all of the changes above in place, deploy the Calico network plugin.

    root@ansible-23:/etc/kubeasz# ./ezctl setup k8s-01 06

Verifying Calico

As before, it is safer to check once the installation has finished.

    root@k8s-master-11:~# calicoctl node status

Deploying CoreDNS

Without a DNS service, nothing in the cluster can resolve domain names. Here the CoreDNS YAML file is taken from the unpacked Kubernetes binary packages and CoreDNS is deployed from that YAML. Running at least two replicas is recommended: it protects against a single failure and keeps resolution working under heavy load.

    # Upload or download the following binary packages
    # Unpack the four binary packages
    # Change to cluster/addons/dns/coredns/ under the kubernetes directory
    # Copy the template file to a directory of your choice and edit it; /root is used here
    # Edit coredns.yaml
    __DNS__DOMAIN__ in-addr.arpa ip6.arpa
    # Change this to the domain defined at initialization; if you have forgotten it, look for CLUSTER_DNS_DOMAIN="dklwj.local" in /etc/kubeasz/clusters/k8s-01/hosts on the ansible server
    # This can be changed too: use another forwarding address or keep the default
    # The coredns image address must be switched to a mirror reachable from China, or the image downloaded through a proxy and pushed to your local Harbor to be pulled from there later
    # Memory limit
    __DNS__MEMORY__LIMIT__
    # Set the DNS address used in the cluster
    __DNS__SERVER__
    # Even after the changes above it will not start: from CoreDNS 1.8 on, the discovery permission must also be granted
    # Create the coredns resources
    # Check that the coredns pods are running
    # Enter a pod and ping a domain name to check that it resolves
    # Across namespaces the short name cannot be pinged by default; append the namespace to the name
    # Normally, ping the fully qualified name
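The last two comments refer to how Kubernetes names Services in DNS: `<service>.<namespace>.svc.<cluster-domain>`. A small helper makes the pattern concrete; the cluster domain is the CLUSTER_DNS_DOMAIN from the hosts file, and the function name is made up for this example.

```shell
#!/bin/bash
# Cluster domain as set in CLUSTER_DNS_DOMAIN in the hosts file.
CLUSTER_DOMAIN="dklwj.local"

# Build the fully qualified DNS name of a Service:
# <service>.<namespace>.svc.<cluster-domain>
svc_fqdn() {
    local svc=$1
    local ns=${2:-default}
    echo "${svc}.${ns}.svc.${CLUSTER_DOMAIN}"
}

svc_fqdn kubernetes default
svc_fqdn kube-dns kube-system
```

Inside a pod, a bare service name only resolves within the pod's own namespace, which is why the namespace has to be added for anything else.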

Deploying the dashboard

The dashboard deployment files are hosted on GitHub at https://github.com/kubernetes/dashboard and can be downloaded from https://github.com/kubernetes/dashboard/releases

    # Download the dashboard YAML file
    ... dashboard_v2.4.0.yaml
    root@k8s-master-11:/usr/local/src# vim dashboard_v2.4.0.yaml
    # Also, the default Service type is only reachable inside the cluster; the port has to be exposed
    # On any machine with internet access, pull the two images in the file, retag them with the internal Harbor address, and push them to the internal Harbor
    # Once the YAML file has been edited, create the dashboard resources
    ... dashboard_v2.4.0.yaml
    namespace/kubernetes-dashboard created
    serviceaccount/kubernetes-dashboard created
    service/kubernetes-dashboard created
    secret/kubernetes-dashboard-certs created
    secret/kubernetes-dashboard-csrf created
    secret/kubernetes-dashboard-key-holder created
    configmap/kubernetes-dashboard-settings created
    role.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
    rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    deployment.apps/kubernetes-dashboard created
    service/dashboard-metrics-scraper created
    deployment.apps/dashboard-metrics-scraper created
    # Check the dashboard after deployment
    root@k8s-master-11:/usr/local/src# kubectl get pod -A
    NAMESPACE              NAME                                        READY   STATUS    RESTARTS   AGE
    default                net-test1                                   1/1     Running   0          16h
    kube-system            calico-kube-controllers-6998dfbfdf-lnhzf    1/1     Running   0          17h
    kube-system            calico-node-db6zj                           1/1     Running   0          17h
    kube-system            calico-node-n5dc8                           1/1     Running   0          17h
    kube-system            calico-node-pvqsf                           1/1     Running   0          17h
    kube-system            calico-node-rmzkx                           1/1     Running   0          17h
    kube-system            coredns-7b79b986c4-4blvp                    1/1     Running   0          16h
    kubernetes-dashboard   dashboard-metrics-scraper-f84d4b4b9-qbjf5   1/1     Running   0          44s
    kubernetes-dashboard   kubernetes-dashboard-757d9965ff-p2xxl       1/1     Running   0          44s
    # Check the ports exposed by the dashboard
    root@k8s-master-11:/usr/local/src# kubectl get svc -A
    NAMESPACE              NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
    default                kubernetes                  ClusterIP   10.200.0.1       <none>        ...
    kube-system            kube-dns                    ClusterIP   10.200.0.2       <none>        ...
    kubernetes-dashboard   dashboard-metrics-scraper   ClusterIP   10.200.123.218   <none>        ...
    kubernetes-dashboard   kubernetes-dashboard        NodePort    10.200.40.237    <none>        ...

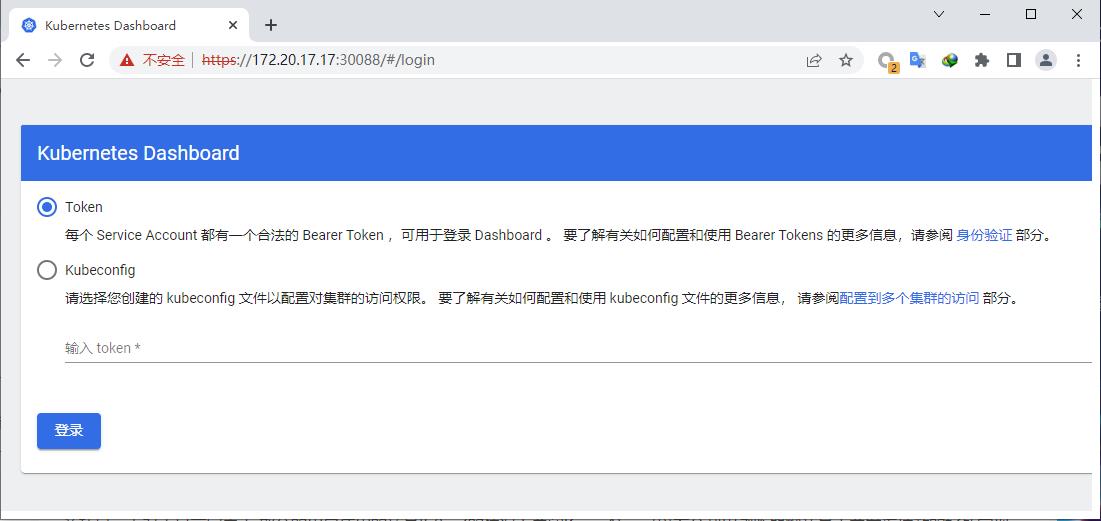

Accessing the dashboard

After deployment, port 30088 is exposed on every node in the cluster. In other words, a Service of type NodePort opens its port on all nodes of the cluster.
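A NodePort has to fall inside the range configured for the cluster, which the hosts file above sets with NODE_PORT_RANGE="30000-40000". The sketch below checks a port against such a range; `port_in_range` is a hypothetical helper, not a kubectl feature.

```shell
#!/bin/bash
# Return 0 if a port lies inside a "low-high" range such as NODE_PORT_RANGE.
port_in_range() {
    local port=$1
    local range=$2
    local low=${range%-*}    # text before the dash
    local high=${range#*-}   # text after the dash
    [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}

if port_in_range 30088 "30000-40000"; then
    echo "30088 is inside the NodePort range"
else
    echo "30088 is outside the NodePort range"
fi
```

A Service manifest that asks for a nodePort outside the configured range is rejected by the API server, so it pays to check before editing the YAML.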

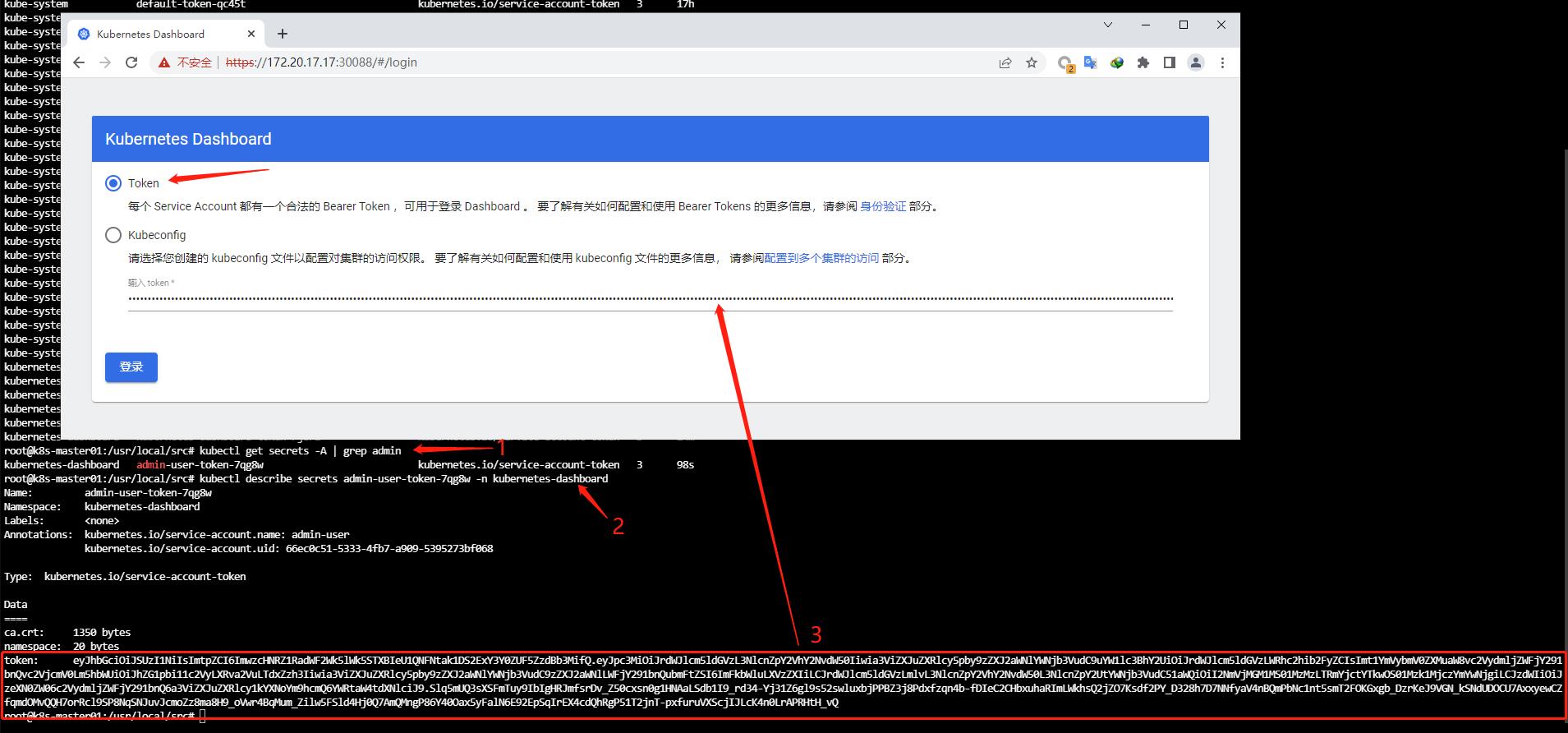

Creating a dashboard access token

With the dashboard deployed, a user still has to be created and authorized before anyone can log in.

    # Manually create the YAML file for the user and its authorization
    ...
    - kind: ServiceAccount
    ...
    # Create the user resources
    # Find the secret of the admin user just created
    ...
    kubernetes.io/service-account.uid: 66ec0c51-5333-4fb7-a909-5395273bf068
    Data
    ====
    ...
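The token sits in the `token:` field of the secret's description. As a small aid, the helper below extracts that field from `kubectl describe secret` output read on stdin. The namespace and secret name in the usage comment are placeholders, since the original commands are not preserved.

```shell
#!/bin/bash
# Print the value of the "token:" field from `kubectl describe secret` output on stdin.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Usage sketch (namespace and secret name are placeholders, not from the original):
#   kubectl -n <namespace> describe secret <admin-secret-name> | extract_token
```

Treat the printed value like a password: it grants whatever rights were bound to the service account.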

Entering the token in the browser

Once you have the admin token, you can log in to the dashboard from a browser.

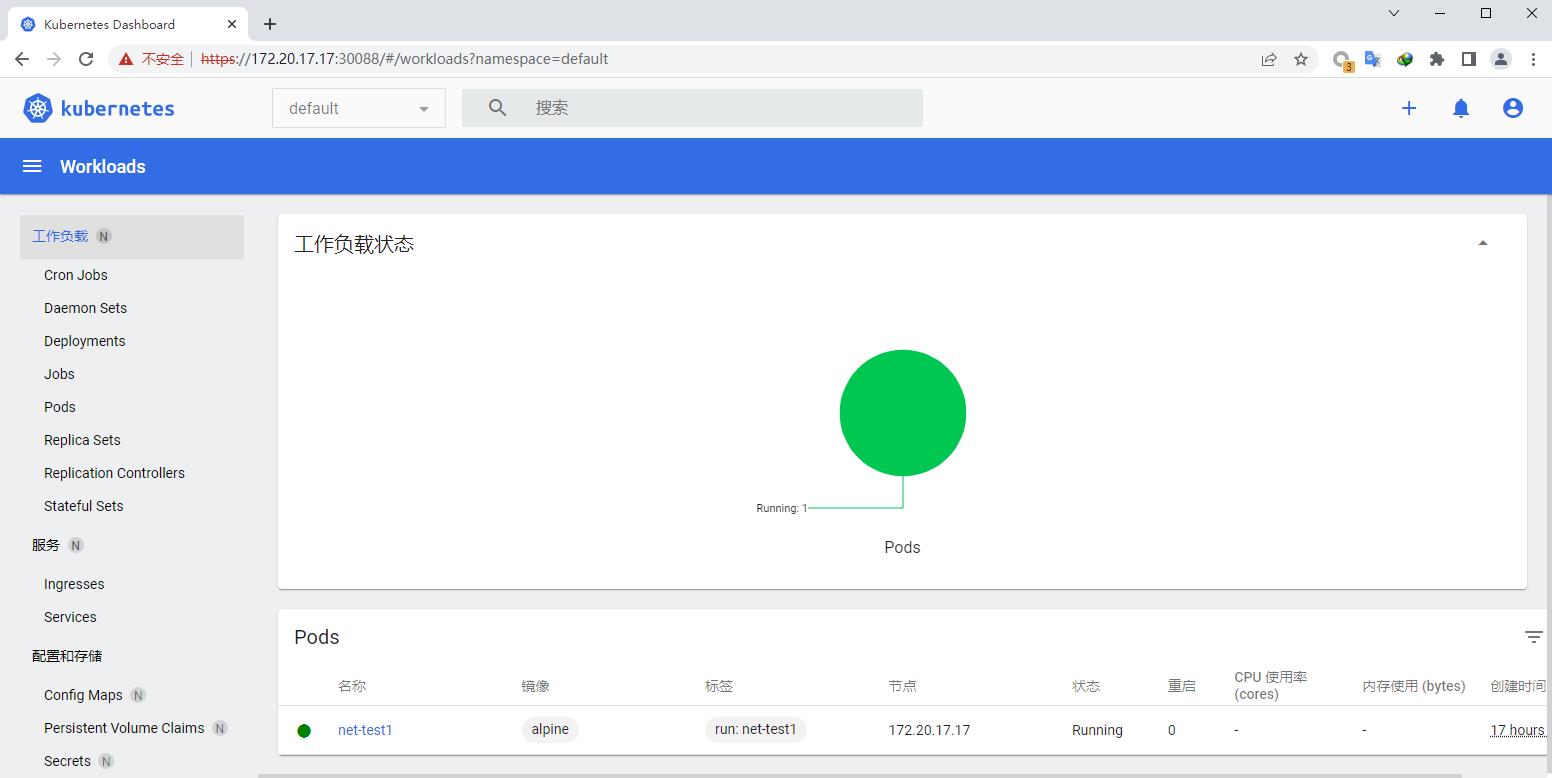

The interface after login

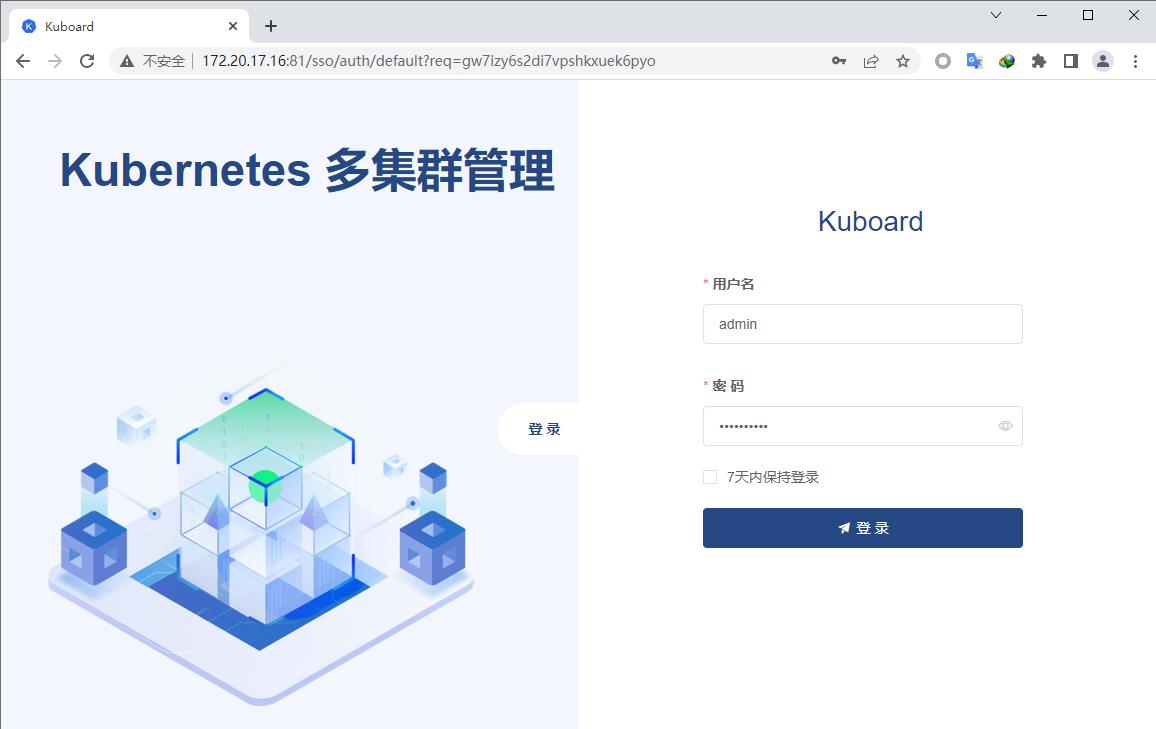

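The Kuboard management interface

Kuboard is a free graphical management tool for Kubernetes (official site: https://kuboard.cn/). It aims to help users get microservices running on Kubernetes quickly. To that end, Kuboard offers the following:

1. Kuboard provides free Kubernetes installation documentation and free online Q&A; about 200 users a day install a K8S cluster by following the Kuboard documentation.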

    # Deploy Kuboard; Docker is used directly here
    docker run -d \
      --restart=unless-stopped \
      --name=kuboard \
      -p 81:80/tcp \
      -p 10081:10081/tcp \
      -e KUBOARD_ENDPOINT="http://172.20.18.16:80" \
      -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
      -v /root/kuboard-data:/data \
      swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3
    0af3fe041b050a905d2d5897aaea281621b172b41a5d5a90586fc0e5f88def05

Accessing Kuboard

Address: http://172.20.17.16:81/

Username: admin

Password: Kuboard123

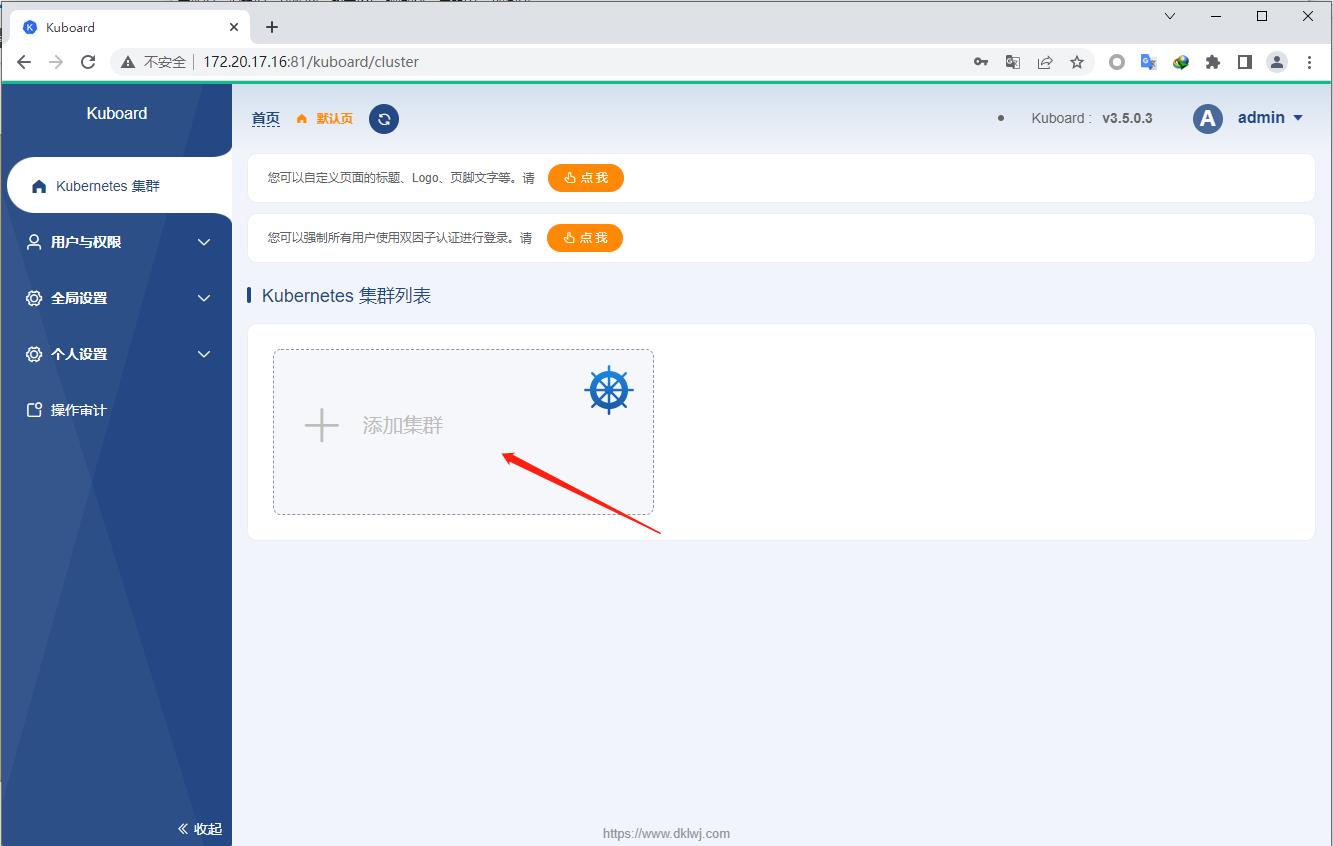

Adding the Kubernetes cluster

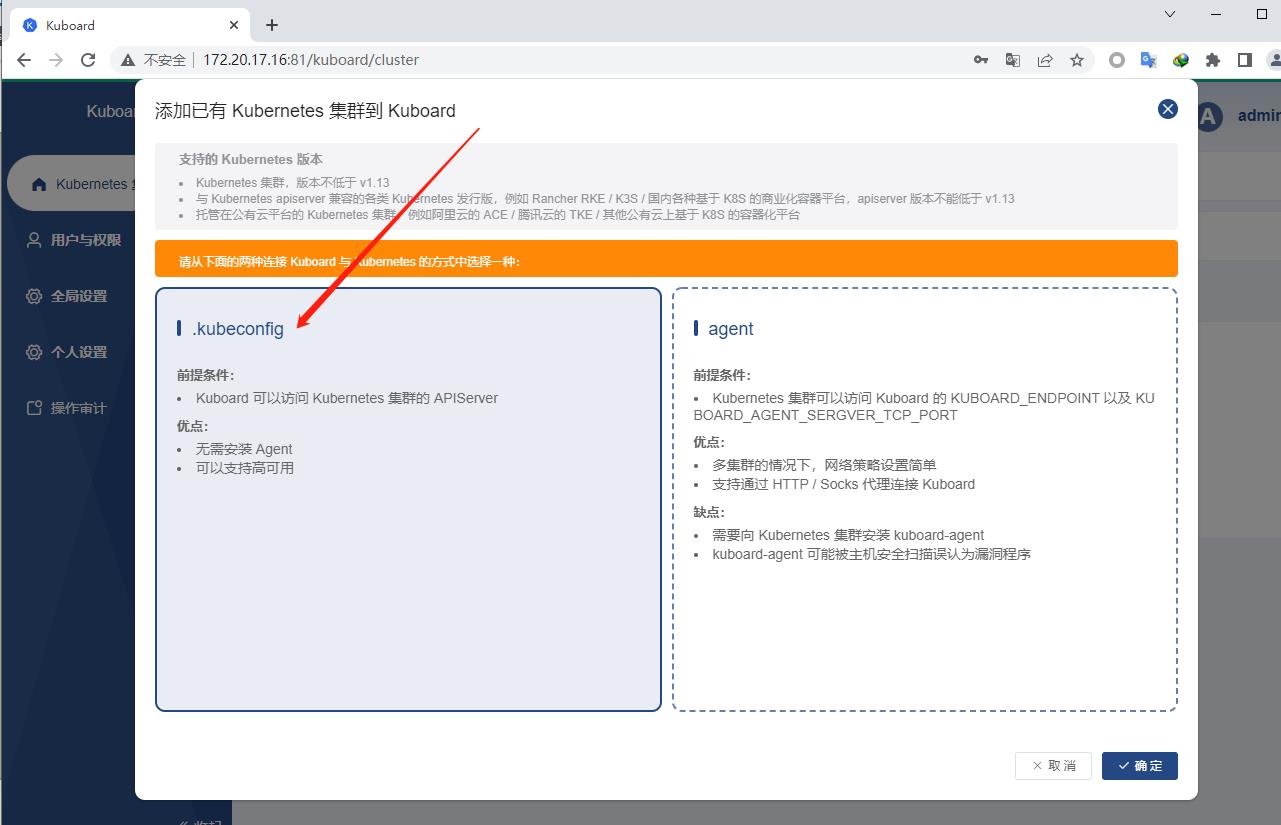

Choosing kubeconfig

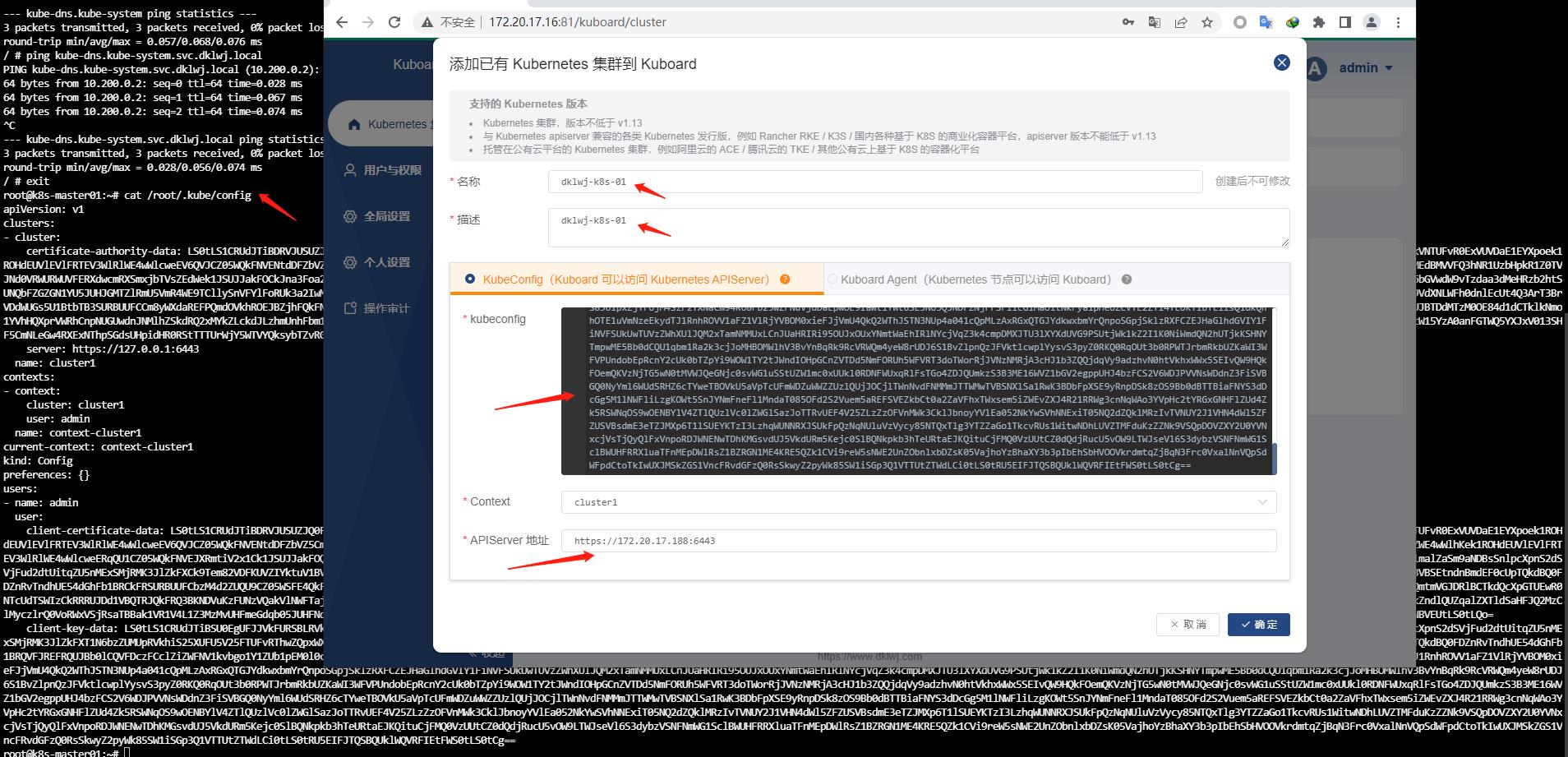

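The cluster is added through its kubeconfig file here. This is convenient because no Kuboard agent has to be installed: copy the entire contents of /root/.kube/config on a master and paste them in.

Give the cluster a name of your own, and adjust the api-server address as needed; if you have a VIP, change it to the VIP address.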
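If the api-server address has to point at the VIP, it can be rewritten while copying the kubeconfig instead of by hand. The sketch below replaces only the host part of every `server: https://host:port` line. The function name and the output file name are made up for this example; 172.20.17.188 is the EX_APISERVER_VIP from the hosts file.

```shell
#!/bin/bash
# Rewrite the host part of every "server: https://host:port" line read from stdin,
# leaving indentation and port untouched.
set_apiserver_host() {
    local host=$1
    sed -E "s#^([[:space:]]*server:[[:space:]]*https://)[^:/]+#\1${host}#"
}

# Usage sketch (the output file name is arbitrary):
#   set_apiserver_host 172.20.17.188 < /root/.kube/config > kubeconfig-for-kuboard
```

Working on a copy keeps the kubeconfig that kubectl uses on the master unchanged.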

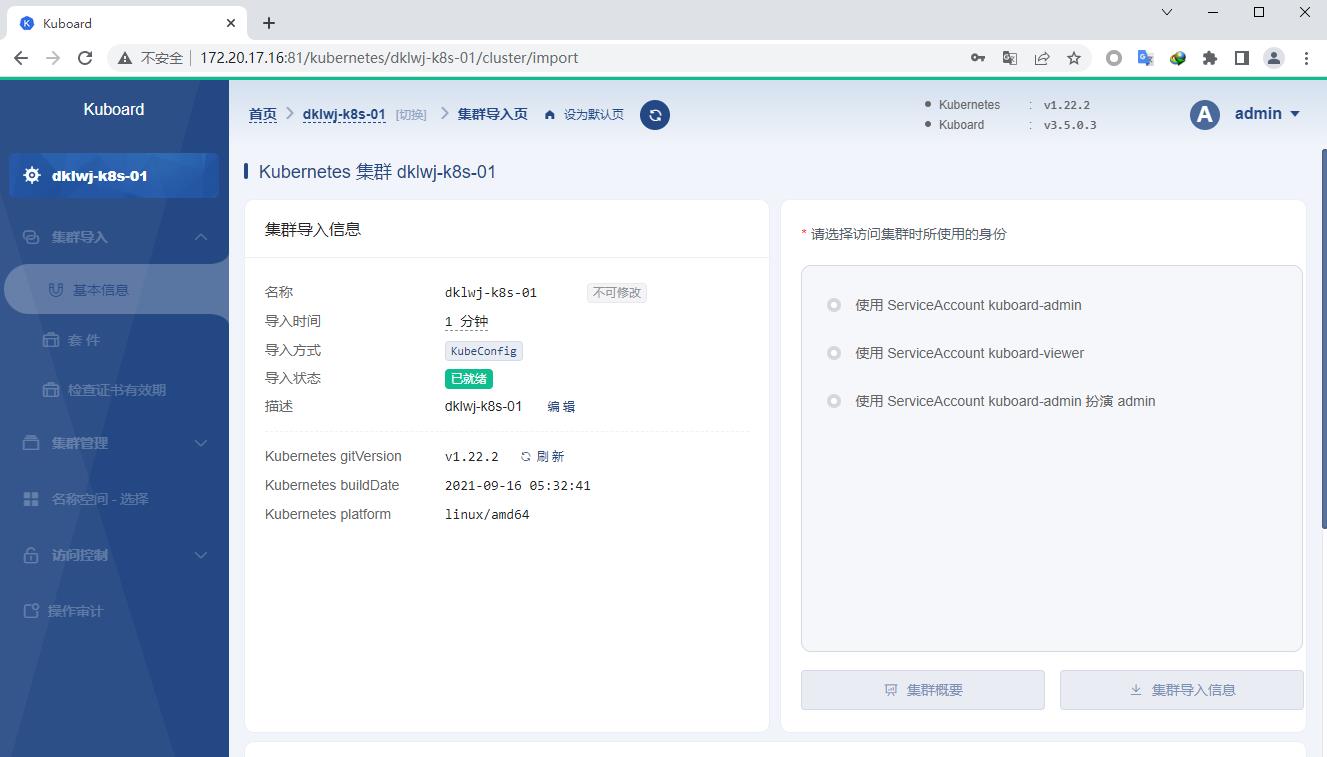

The Kubernetes cluster has been added

Managing the cluster with Kuboard

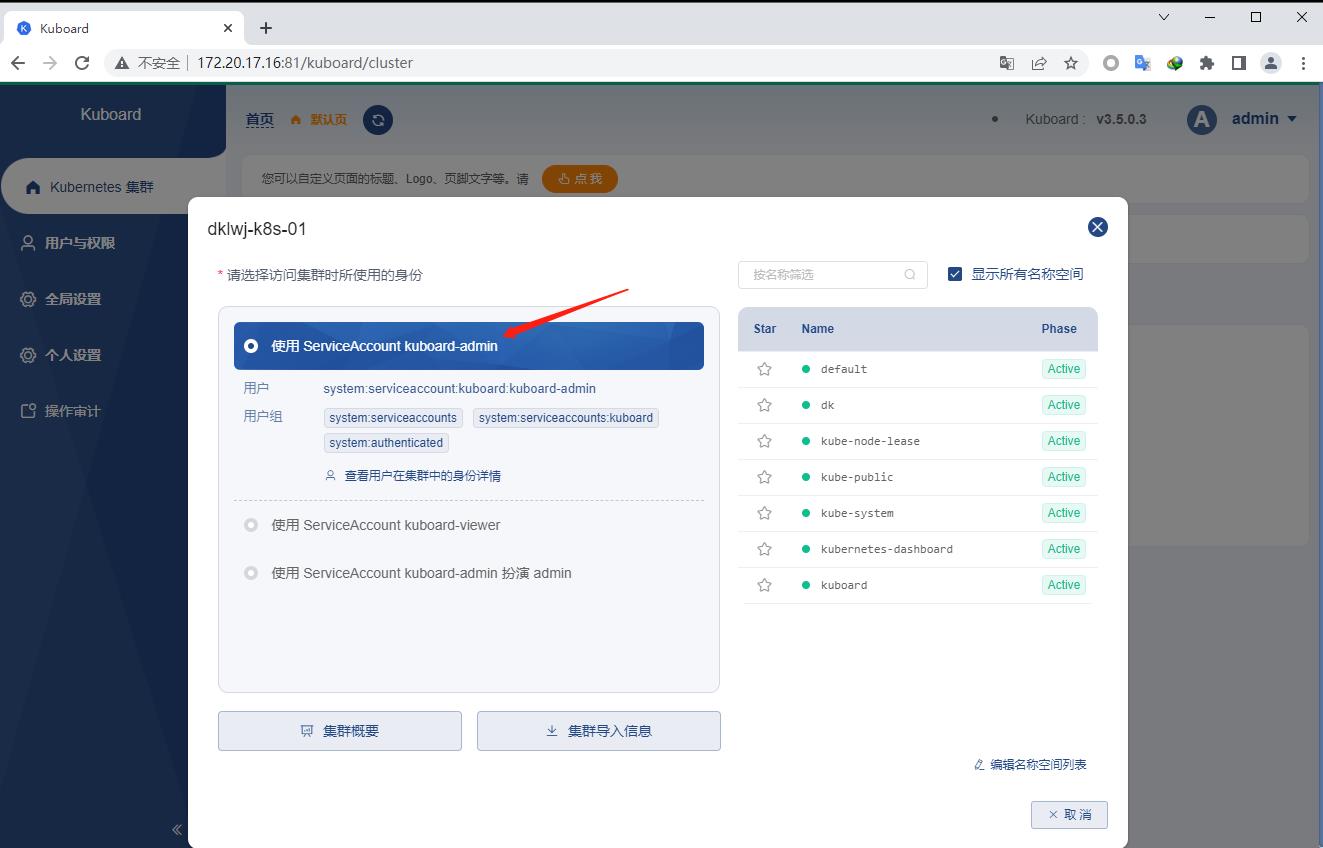

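Home -> Kubernetes clusters -> Kubernetes cluster list -> the cluster you defined

Select the identity to use; admin, the first entry, is used here. Then click the cluster overview below to see the current state of the cluster.

Checking the cluster status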

Installing Metrics-server

Metrics-server has to be installed manually.

    # Download the metrics-server image
    # Push it to Harbor
    # Upload its YAML file
    # Change the image address in the file: the default points at the official k8s registry, which is not reachable from China, so pull the image manually and push it to the internal Harbor
    #image: k8s.gcr.io/metrics-server/metrics-server:v0.4.4
    image: 172.20.17.24/base-image/metrics-server:v0.4.4
    # Create the resources
    root@k8s-master-11:/app/k8s# kubectl apply -f components-v0.4.4.yaml
    serviceaccount/metrics-server created
    clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
    clusterrole.rbac.authorization.k8s.io/system:metrics-server created
    rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
    clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
    clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
    service/metrics-server created
    deployment.apps/metrics-server created
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
    # Check that it was created successfully
    root@k8s-master-11:/app/k8s# kubectl get pod -A
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS        AGE
    kube-system            calico-kube-controllers-8b9bbbfcb-xjv55      1/1     Running   1 (121m ago)    10d
    kube-system            calico-node-c9tq2                            1/1     Running   1 (124m ago)    10d
    kube-system            calico-node-rd7xj                            1/1     Running   1 (121m ago)    10d
    kube-system            calico-node-rpx4p                            1/1     Running   1 (124m ago)    10d
    kube-system            calico-node-w2rh6                            1/1     Running   1 (121m ago)    10d
    kube-system            calico-node-z7p52                            1/1     Running   1 (121m ago)    9d
    kube-system            calico-node-zj57r                            1/1     Running   1 (124m ago)    10d
    kube-system            coredns-59f6db5ddb-l7xd7                     1/1     Running   1 (121m ago)    9d
    kube-system            metrics-server-58ff68569f-h4qvv              1/1     Running   0               43s
    kubernetes-dashboard   dashboard-metrics-scraper-54b4678974-9xq6s   1/1     Running   1 (121m ago)    9d
    kubernetes-dashboard   kubernetes-dashboard-7b5d8d5578-565qj        1/1     Running   1 (121m ago)    9d
    # Check that it really works
    root@k8s-master-11:/app/k8s# kubectl top node
    NAME           CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    172.20.17.11   326m         8%     1690Mi          22%
    172.20.17.12   253m         12%    1119Mi          66%
    172.20.17.13   291m         14%    1178Mi          70%
    172.20.17.14   214m         10%    1354Mi          37%
    172.20.17.15   200m         10%    1368Mi          37%
    172.20.17.16   210m         10%    1462Mi          40%
    root@k8s-master-11:/app/k8s# kubectl top pod -A
    NAMESPACE              NAME                                         CPU(cores)   MEMORY(bytes)
    kube-system            calico-kube-controllers-8b9bbbfcb-xjv55      4m           26Mi
    kube-system            calico-node-c9tq2                            68m          117Mi
    kube-system            calico-node-rd7xj                            61m          108Mi
    kube-system            calico-node-rpx4p                            93m          106Mi
    kube-system            calico-node-w2rh6                            63m          108Mi
    kube-system            calico-node-z7p52                            73m          108Mi
    kube-system            calico-node-zj57r                            53m          104Mi
    kube-system            coredns-59f6db5ddb-l7xd7                     5m           14Mi
    kube-system            metrics-server-58ff68569f-h4qvv              7m           13Mi
    kubernetes-dashboard   dashboard-metrics-scraper-54b4678974-9xq6s   2m           10Mi
    kubernetes-dashboard   kubernetes-dashboard-7b5d8d5578-565qj        1m           15Mi
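Once `kubectl top` works, its output is easy to post-process. As a small illustration, the helper below (a hypothetical function) lists the nodes whose MEMORY% exceeds a threshold, reading the `kubectl top node` table from stdin.

```shell
#!/bin/bash
# List nodes whose MEMORY% (fifth column of `kubectl top node`) is above the given limit.
nodes_over_memory() {
    awk -v limit="$1" 'NR > 1 { pct = $5; sub(/%/, "", pct); if (pct + 0 > limit) print $1 }'
}

# Usage:
#   kubectl top node | nodes_over_memory 60
```

Run against the output shown above with a limit of 60, it would report 172.20.17.12 and 172.20.17.13, the two nodes at 66% and 70%.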

Stay tuned for more...